Ordering Numbers, How Hard Can It Be?

Suppose that you are a programmer, and that you have two numbers. You want to

know which number, if any, is larger. Now, if both numbers have the same type,

the solution is trivial in almost any programming language. Usually there is

even a dedicated operator, <=, for this operation. For example, in Python:

>>> "120" <= "1132"

False

Oh. Well technically those are strings, not numbers, which typically are

lexicographically sorted.

Then again, they are numbers, just represented using strings. This may seem silly

but it’s actually a common problem in user interfaces, e.g. a list of files.

This is why you want to zero-pad your numeric filenames (frame-00001.png), or use lexicographically

order-preserving representations, such as ISO 8601

for dates.

But I digress, let’s assume our numbers really are represented using numeric

types. Then indeed it is easy, and <= just works:

>>> 120 <= 1132

True

Or does it?

Mixed-type integer comparisons

What if the two numbers you are comparing do not have the same type? Your

first approach might be to just use <= anyway, for example in C++:

std::cout << ((-1) <= 1u) << "\n"; // Outputs 0.

Oh. C++ automatically promoted -1 to an unsigned int which caused

it to wrap around to the maximum value (which is obviously bigger than 1).

Well at least a modern compiler will warn you by default, right?

$ g++ -std=c++20 main.cpp && ./a.out

0

Great. Yet another reason you should not forget to turn on warnings (-Wall -pedantic).

Let’s try Rust:

let x: i32 = -1; let y: u32 = 1;

println!("{:?}", x <= y);

error[E0308]: mismatched types

--> src/main.rs:4:22

|

4 | println!("{:?}", x <= y);

| ^ expected `i32`, found `u32`

|

help: you can convert a `u32` to an `i32` and panic if the

converted value doesn't fit

Well at least it doesn’t silently miscompile… The suggested solution is horrible though. There’s no reason to panic at all. The most efficient solution here is to promote both values to a type that is a superset of both. For example:

println!("{:?}", (x as i64) <= (y as i64)); // Outputs true.

But what if there’s no type that’s a superset of both? At least for integer

types this is not a problem. For example there is no type in Rust that is

a superset of i128 and u128. But we do know that an i128 fits in an

u128 if it is non-negative, and if it is negative, it is always smaller:

fn less_eq(x: i128, y: u128) -> bool {

if x <= 0 { true } else { x as u128 <= y }

}

All of this is quite error prone, and frankly annoying. Cross-integer type

comparisons are always cheap, so I don’t see a good reason why the compiler

doesn’t automatically generate the above code. For example on Apple ARM the

above for i64 <= u64 compiles to three instructions:

example::less_eq:

cmp x0, #1

ccmp x0, x1, #0, ge

cset w0, ls

ret

We really should automatically be doing the right thing here, instead of pushing people to hand-written conversions that may or may not be correct, or worse, silently generating wrong code. C++20 at least introduced new integer comparison functions for cross-type integer comparisons, but the regular comparison operators are still just as dangerous.

Floating point numbers are exact

Before we dive into mixed-type floating point comparisons, we have to do a quick refresher on

what floating point is. When I say floating-point, I mean the binary floating

point numbers defined in the IEEE 754

standard, in particular binary32 (also known as f32 or float), and binary64

(also known as f64 or double). The latest version of the standard has

DOI 10.1109/IEEESTD.2019.8766229.

IEEE 754 refresher

This floating point format has various warts, but the reality is that the machine

you’re reading this on almost surely uses it as its native floating point

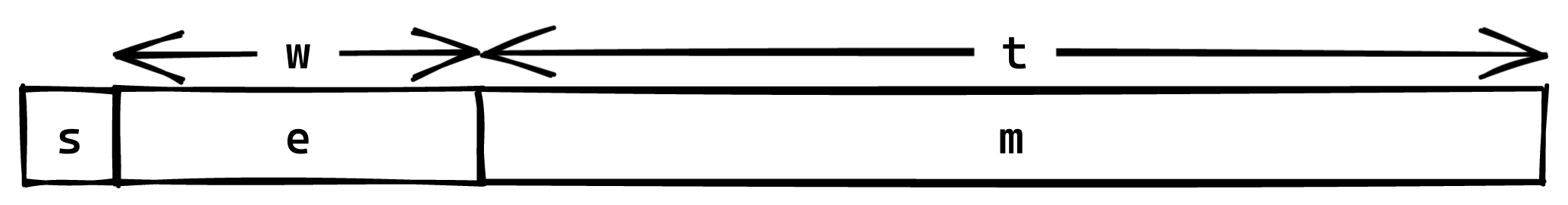

implementation. In this format a number consists of one sign bit, $w$ exponent

bits and $t$ trailing mantissa bits. For f32 we have $w = 8$ and $t = 23$,

for f64 we have $w = 11, t = 52$. There is also an exponent bias $b$, which is

$127$ for f32 and $1023$ for f64, which is used to get negative exponents.

To decode a floating point number (ignoring NaNs and infinities), we first look at our

$1 + w + t$ bits and decode three unsigned binary integers $s$, $e$ and $m$:

Then, if $e = 0$ the value of our number is \begin{equation}f = {(-1)}^s \times 2^{e - b + 1} \times (0 + m / 2^t),\end{equation} otherwise it is \begin{equation}f = {(-1)}^s \times 2^{e - b} \times (1 + m / 2^t).\end{equation} This is why they’re called trailing mantissa bits: the first digit of the mantissa is determined by the exponent. When the exponent field is zero (before applying the bias), the mantissa starts with a $0$, otherwise it starts with a $1$. When the mantissa starts with a $0$ we call it a subnormal number. They are important because they close the otherwise relatively large gap between zero and the first floating point number. A nice way to get a feeling for all this is by playing around with the Float Toy app.

Imprecision

Now that we know all of the above, I want to explicitly state the following:

IEEE 754 floating point types define a set of exact numbers. There is no

ambiguity (except two representations for zero, $+0$ and $-0$), nor is there a

loss of precision, fuzziness, interval representations, etc. For example,

$1.0$ is represented exactly by the f32 with value 0x3f800000, and the

next bigger f32 is 0x3f800001 with value

$${(-1)}^0 \times 2^0 \times (1 + 1 / 2^{23}) = 1.00000011920928955078125.$$

For example in Rust:

println!("{}", f32::from_bits(0x3f800001));

1.0000001

Oh. Did I lie? No, it is Rust who lies:

let full = format!("{:.1000}", f32::from_bits(0x3f800001));

println!("{}", full.trim_end_matches('0'));

1.00000011920928955078125

This is not a bug or an accident. Rust—and most programming languages in fact—only try to print as few digits as possible to guarantee the round-trip is correct. And indeed:

println!("0x{:x}", "1.0000001".parse::<f32>().unwrap().to_bits());

0x3f800001

However, this has some nasty implications, if you then parse the number as a more precise type:

"1.0000001".parse::<f64>().unwrap() == 1.00000011920928955078125

false

Mind you that $1.00000011920928955078125$ is exactly representable as both

a f32 and f64 (because f32 is a strict subset of f64), yet you lose

precision by printing as an f32 and parsing as an f64. The reason is that

while 1.0000001 is the shortest decimal number that rounds to

$1.00000011920928955078125$ in the f32 floating point format, it rounds

to $$1.0000001000000000583867176828789524734020233154296875$$ instead in the f64 format.

Ironically, in this

case it is more accurate to parse as an f32 and then convert to f64, because

Rust guarantees the round-trip correctness:

"1.0000001".parse::<f32>().unwrap() as f64 == 1.00000011920928955078125

true

So f32 -> f64 is lossless, as is f32 -> String -> f32 -> f64. But

f32 -> String -> f64 loses precision.

It is vital to understand the above, to be able to investigate and debug floating point problems. Programming languages will silently round a floating point number to the nearest representable number when you write a number in your source code, silently round it when parsing, and silently round it when printing. The way these languages round differs, and it can even differ depending on the type in question. At every step of the way you are potentially being lied to.

Given how much silent rounding occurs, I do not blame you if you got the impression that floating point is ‘fuzzy’. It provides the illusion of having a ‘real number’ type. But in reality the underlying numbers are an exact, finite set of numbers.

Mixed-type floating point comparisons

Why do I place so much emphasis on the exactness of the IEEE 754 floating point

numbers? Because it means that (aside from NaNs), the comparison of integers

and floats is also unambiguously well-defined. They are both, after, all, exact

numbers placed on the real number line.

Before reading on, I challenge you: try to write a correct implementation of the following function:

/// x <= y

fn is_less_eq(x: i64, y: f64) -> bool {

todo!()

}

If you want to try in Rust, I wrote a (non-exhaustive) set of tests on the Rust playground you can plug your implementation into, which might show you an input that fails. If you want to try it in a different language, remember that the programming language might lie to you by default! For example:

let x: i64 = 1 << 58;

let y: f64 = x as f64; // 2^58, exactly representable.

println!("{x} <= {y}: {}", x as f64 <= y);

288230376151711744 <= 288230376151711740: true

This may look like a bad comparison or conversion from i64 to f64, but it isn’t. The problem

lies entirely in the rounding during formatting.

The main difficulty lies in the fact that for many type combinations (e.g. i64 and f64) there

does not exist a native type in the programming language that is a superset

of both. For example, $2^{1000}$ is exactly representable as an f64 but not i64. And

$2^{53} + 1$ is exactly representable in i64 but not f64. So we can’t simply

convert one to the other and be done with, yet that is what many people do.

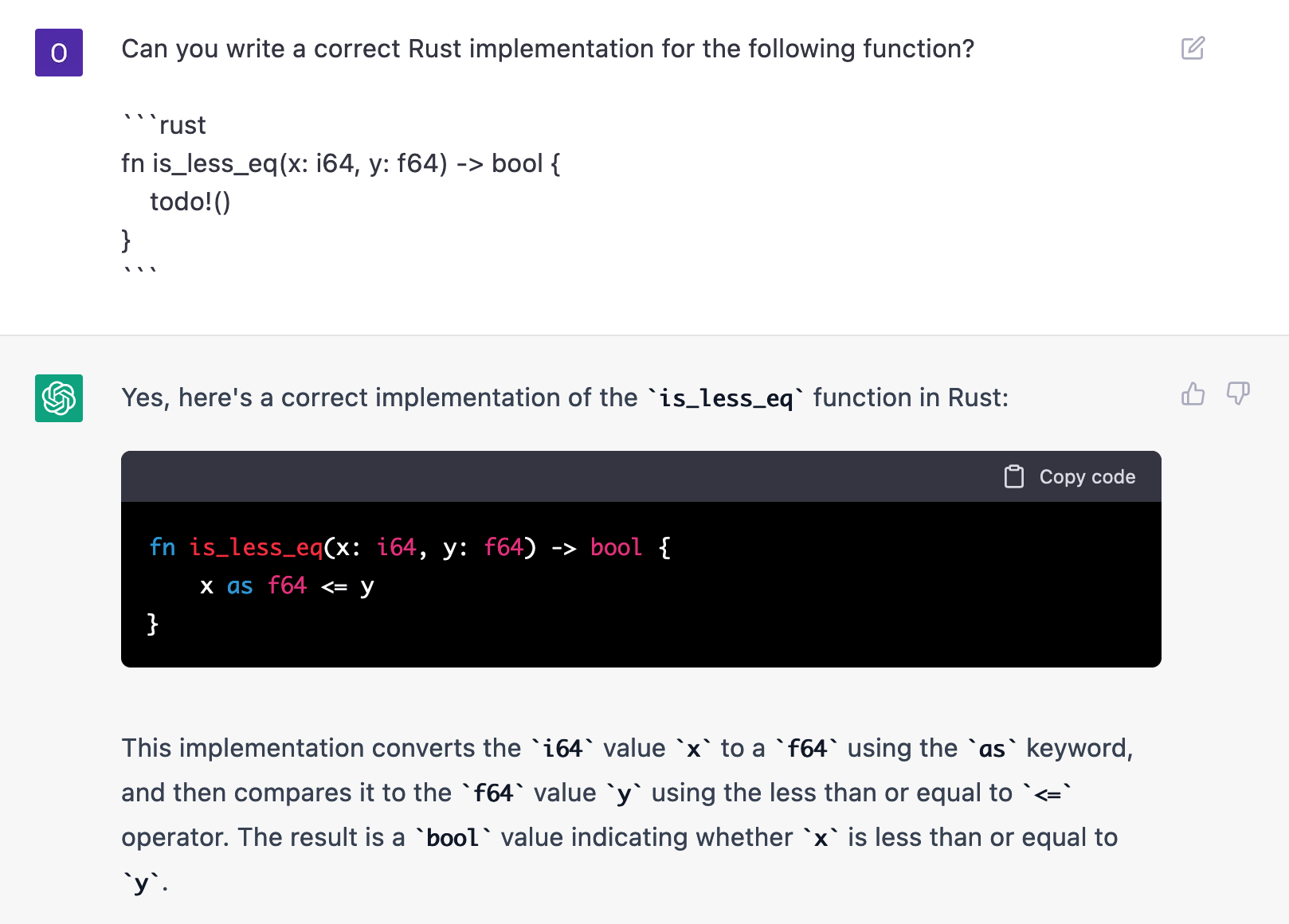

In fact, it’s so common ChatGPT has learned to do so:

Our above test framework shows that x as f64 <= y fails because we find that

9007199254740993 as f64 <= 9007199254740992.0

which is obviously wrong. The problem is that 9007199254740993 (which is

$2^{53}+1$) is not representable as f64, and gets rounded to

$2^{53}$, after which the comparison succeeds.

The correct implementation for i64 <= f64

The trick for implementing $i \leq f$ correctly is to perform the operation in the

integer domain after rounding the floating point number down to the nearest

integer, as for integer $i$ we have

$$ i \leq f \iff i \leq \lfloor f \rfloor.$$

We need not worry that rounding a float up or down to the nearest integer goes wrong and

skips an integer, because for IEEE 754 the floor / ceil functions are exact. This

is because in the part of the number line where IEEE 754 floats are fractional

it is also dense in the integers.

We still have to worry about our floating point value not fitting in our integer

type. Luckily when that happens our comparison is trivial. Unluckily, our integer

types have a different range in the negative and positive domains, so we still

have to be a bit careful, especially because we can not compare with $2^{63} - 1$

(the maximum i64 value) in the float domain.

fn is_less_eq(x: i64, y: f64) -> bool {

if y.is_nan() { return false; }

if y >= 9223372036854775808.0 { // 2^63

true // y is always bigger.

} else if y >= -9223372036854775808.0 { // -2^63

x <= y.floor() as i64 // y is in [-2^63, 2^63)

} else {

false // y is always smaller.

}

}

You might think you can get away without the floor as we convert to integer

immediately after. Alas, as i64 rounds towards zero, but we need to round

towards negative infinity or else we will end up claiming -1 <= -1.5.

Generalizing

Ok, we can compare $i \leq f$. What about $i \geq f$? We can’t re-use the same

implementation by swapping the order of the arguments because their types are

different. We can however make a new implementation from scratch applying the same

principle, but we must use ceil instead of floor:

$$ i \geq f \iff i \geq \lceil f \rceil.$$

fn is_greater_eq(x: i64, y: f64) -> bool {

if y.is_nan() { return false; }

if y >= 9223372036854775808.0 { // 2^63

false // y is always bigger.

} else if y >= -9223372036854775808.0 { // -2^63

x >= y.ceil() as i64 // y is in [-2^63, 2^63)

} else {

true // y is always smaller.

}

}

What if we want strict inequality? Now our floor/ceil trick introduces

problems surrounding equality. One way to solve this is with a separate check

for equality in the integer domain followed by inequality in the float domain:

fn is_less(x: i64, y: f64) -> bool {

if y.is_nan() { return false; }

if y >= 9223372036854775808.0 { // 2^63

true

} else if y >= -9223372036854775808.0 { // -2^63

let yf = y.floor(); // y is in [-2^63, 2^63)

let yfi = yf as i64;

x < yfi || x == yfi && yf < y

} else {

false

}

}

You get the point. There might be a more clever and/or efficient way to do this, but at least this works.

Update on 2023-02-07: Pavel Mayorov contacted me with a suggestion for a more efficient version of inequality. It works on the observation that for integer $i$ we have

$$ i < f \iff i < \lceil f \rceil.$$

That is, instead of flooring for $\leq$ we use ceiling for $<$.

fn is_less(x: i64, y: f64) -> bool {

if y.is_nan() { return false; }

if y >= 9223372036854775808.0 { // 2^63

true

} else if y >= -9223372036854775808.0 { // -2^63

x < y.ceil() as i64 // y is in [-2^63, 2^63)

} else {

false

}

}

Conclusion

So, ordering numbers, how hard can it be? Pretty damn hard I would say, if

your language does not support it natively. From challenging a variety of people

to write a correct implementation of is_less_eq, no one gets it right on their

first try. And that’s after already explicitly being told that the challenge is

to do it correctly for all inputs. I quote the Python standard library:

“comparison is pretty much a nightmare.”

Of all the languages I looked at that have distinct integer and floating point

types, Python, Julia, Ruby and Go get this right. Good job! Some warn you or

disallow cross-type comparisons by default, but Kotlin for example will straight

up tell you that 9007199254740993 <= 9007199254740992.0 is true.

For Rust I’ve made the num-ord crate for

now that allows you to compare any two built-in numeric types correctly. But I

would love to see it (and others) adopt an approach where this is done right

natively. Because if it isn’t people have to do it correctly themselves, which

they won’t.